World Leaders and Tech Titans Converge on New Delhi as AI’s Promise and Peril Take Center Stage

The humid February air in New Delhi carries more than the usual weight of anticipation this week. As delegates from over 20 nations stream into the city’s sprawling convention center, the AI Impact Summit, the fourth in a series of global AI summits, opens not with triumphant proclamations about technological revolution, but with something far more telling: a palpable sense of unease masked by diplomatic pleasantries.

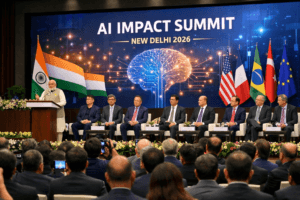

Indian Prime Minister Narendra Modi inaugurated the five-day gathering on Monday, standing before a sea of faces that includes OpenAI’s Sam Altman, Google’s Sundar Pichai, and leaders from France, Brazil, and many other nations. The venue buzzes with the particular energy of an event where the stakes keep rising faster than anyone can reasonably address them.

“Further proof that our country is progressing rapidly in the field of science and technology,” Modi posted on X, projecting the confidence of a host nation eager to claim its place in the AI hierarchy. But beneath the polished surface of panel discussions and bilateral meetings, the conference grapples with questions that have no easy answers—questions about jobs, about safety, about energy, and ultimately about whether humanity can maintain control of something it has unleashed with breathtaking speed.

The Developing World Takes the Microphone

For the first time, the summit series finds its home in a developing nation, and the shift in venue signals something important about how the AI conversation is evolving. Previous summits in Bletchley Park, Seoul, and Paris carried the unmistakable imprint of Western technological dominance. New Delhi changes the calculus.

India’s IT ministry frames this as a moment when the global South can finally shape the agenda: “The summit will shape a shared vision for AI that truly serves the many, not just the few.” It’s a carefully chosen phrase that acknowledges what delegates from developing economies have long argued: that AI’s benefits and risks are distributed unevenly across the global map.

Walking through the conference halls, you can see this tension playing out in real time. Panels explore how AI might address India’s notoriously dangerous roads, where nearly 150,000 people die annually in traffic accidents. Others examine how South Asian women interact with technology, probing whether AI tools reflect their realities or simply import biases from wealthier, whiter contexts.

Dr. Meera Chandrasekaran, a Delhi-based technology policy researcher attending the summit, puts it bluntly between sessions: “We’ve spent years watching Silicon Valley build products for markets that look nothing like ours. Now we’re supposed to absorb those products, adapt to them, and deal with the consequences. This summit matters because it’s happening here, because for once we’re asking what AI means for people who don’t live in California or London.”

The Uncomfortable Questions Nobody Wants to Answer

Yet location alone cannot resolve the fundamental tensions that have come to define every major AI gathering. Behind closed doors and during coffee breaks, delegates wrestle with five interconnected concerns that resist easy resolution.

Job displacement tops nearly everyone’s list. The International Monetary Fund projects that AI could affect nearly 40 percent of jobs globally, with advanced economies facing even higher exposure. In India, where the IT services sector employs millions and serves as a critical economic engine, the anxiety cuts deep. If AI can write code, handle customer service, and analyze data more cheaply than human workers, what happens to the next generation of Indian engineers?

A senior Indian software executive who asked not to be named sums up the industry’s ambivalence: “We’re building AI tools that will eventually make some of our own employees redundant. That’s the uncomfortable truth we don’t discuss in press releases. We talk about augmentation, about upskilling, about new opportunities. And those things matter. But if you’re a 45-year-old programmer whose skills are suddenly obsolete, those abstractions don’t pay your mortgage.”

Then there’s the question of harm—real, tangible damage that AI systems can inflict when they fail or when they’re deliberately misused. Misinformation and deepfakes have already demonstrated their power to destabilize elections, destroy reputations, and manipulate public opinion. At the Delhi summit, safety panels draw standing-room-only crowds as researchers present findings on everything from algorithmic bias to the weaponization of generative tools.

Kelly Forbes, director of the AI Asia Pacific Institute, strikes a cautiously optimistic note amid the warnings. Her organization researches how countries like Australia are forcing platforms to address minors’ safety online. “There is real scope for change,” she says. But she acknowledges the pace of reform lags dangerously behind the technology’s evolution. Children remain exposed to AI-generated content that can exploit, manipulate, and harm them—sometimes before regulators even understand what’s possible.

The Energy Elephant in the Room

Perhaps the most physically tangible concern at the summit has nothing to do with ones and zeros. AI consumes electricity on a staggering scale. Training large language models requires data centers that guzzle power and water, leaving carbon footprints that complicate every corporate sustainability report.

India, like much of the developing world, faces an excruciating trade-off. Economic growth demands energy. AI development demands even more. Yet climate commitments cannot be abandoned without catastrophic consequences. The tension plays out in hallway conversations between tech executives eager to expand their operations and environmental advocates who warn that AI’s environmental costs fall heaviest on communities already bearing the brunt of climate change.

A European delegate involved in energy policy negotiations at the summit describes the challenge: “We’re asking developing countries to leapfrog fossil fuels while simultaneously demanding they build out massive computing infrastructure. The math doesn’t work unless we fundamentally rethink how AI systems are designed and powered. That conversation is happening here, but it’s not happening loudly enough.”

Regulation’s Impossible Balancing Act

The question of how—or whether—to regulate AI has haunted every summit since Bletchley, and Delhi proves no exception. The gathering’s stated goal of producing a “shared roadmap for global AI governance” runs directly into the reality that major players cannot agree on fundamentals.

At last year’s Paris summit, dozens of nations signed a statement calling for “open” and “ethical” AI regulation. The United States declined, with Vice President JD Vance warning that “excessive regulation of the AI sector could kill a transformative industry just as it’s taking off.” That divide persists in Delhi, complicated further by China’s absence from key consensus documents and the European Union’s push for comprehensive rules that many in the industry consider overly restrictive.

Amba Kak, co-executive director of the AI Now Institute, offers a sobering assessment of what these gatherings actually accomplish. “Even the much-touted industry voluntary commitments made at these events have largely been narrow ‘self regulatory’ frameworks that position AI companies to continue to grade their own homework,” she tells reporters on the summit’s sidelines.

Her critique resonates with delegates from countries that lack the bargaining power to impose their own rules. When Microsoft and Google dominate the conversation, when OpenAI’s Sam Altman commands a roomful of journalists while smaller nations struggle for attention, the idea of genuinely democratic AI governance feels increasingly aspirational.

Existential Questions and Whistleblowers

And then there’s the final concern—the one that sounds like science fiction until you hear it from people who build the technology. Existential risk. The possibility that AI systems might someday exceed human capabilities in ways that prove impossible to control.

OpenAI and Anthropic, two of the industry’s most prominent players, have both seen public resignations from staff members who warned that their employers were moving too fast, cutting too many corners. Anthropic disclosed last week that its latest chatbot models could be nudged toward “knowingly supporting—in small ways—efforts toward chemical weapon development and other heinous crimes.” The admission landed like a grenade in an industry already struggling with credibility.

Eliezer Yudkowsky, co-author with Nate Soares of the starkly titled 2025 book “If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All,” has compared AI development to the creation of nuclear weapons, a technology whose dangers were understood too late. In Delhi, references to Yudkowsky’s work surface in breakout sessions and private dinners, a sign that even mainstream delegates take the existential question more seriously than public statements suggest.

India’s Moment, India’s Ambitions

For Modi’s government, the summit represents an opportunity to showcase India’s technological emergence. Stanford University researchers recently ranked India third globally in AI competitiveness, leapfrogging South Korea and Japan. The numbers tell a story of rapid progress fueled by massive investments in infrastructure and education.

Yet experts caution against reading too much into the rankings. India’s AI ecosystem remains heavily dependent on servicing Western companies rather than developing original technologies. The country produces excellent engineers, but it does not yet produce foundation models that compete with OpenAI’s GPT series or Google’s Gemini. The gap between ambition and reality yawns wide.

A mid-career researcher from Bangalore attending the summit captures the frustration: “We’re hosting the world, which is wonderful. But ask yourself how many Indian companies are actually shaping the future of AI. The answer is very few. We’re consumers of technology, not creators. Until that changes, summits like this risk becoming elaborate performances rather than genuine moments of transformation.”

What Progress Actually Looks Like

Despite the skepticism, moments of genuine engagement punctuate the conference. In a windowless conference room on the convention center’s second floor, representatives from a dozen countries huddle around a table, hammering out language for a proposed agreement on AI transparency. The negotiations move slowly, hampered by translation delays and competing national interests. But they move.

Down the hall, a panel on AI and labor draws a standing-room crowd of workers, union representatives, and technology ethicists. The conversation turns surprisingly practical: how to negotiate collective bargaining agreements that protect workers from algorithmic management, how to ensure that productivity gains translate into better wages rather than simply higher profits.

Sessions like these are not the stuff of headlines. They will not generate the breathless coverage that follows Sam Altman through the venue. But they represent something perhaps more important: the slow, difficult work of building institutions that can actually govern a technology that refuses to wait for human consensus.

The Long Road Ahead

As the Delhi summit continues through the week, delegates will finalize statements, sign agreements, and pose for photographs that will circulate across social media. Modi will host dinners. CEOs will announce partnerships. The machinery of international diplomacy will grind forward, producing documents that may or may not survive contact with reality.

What happens after the delegates fly home matters more. Whether countries actually implement the principles they endorse. Whether companies honor the commitments they make. Whether workers see protections or simply more fine print.

The technology, meanwhile, will not pause. Somewhere in California, another model trains on another dataset, growing more capable, more powerful, harder to ignore. The gap between what AI can do and what we have decided to allow widens with each passing day.

Step out of the convention center into Delhi’s chaotic streets, past vendors selling chai and auto-rickshaws negotiating impossible traffic, and the abstraction of AI governance collides with the reality of how most people actually live. They do not care about existential risk or frontier models. They care about whether their jobs will exist next year, whether their children will be safe online, whether the technology that promises so much will deliver anything at all.

That is the audience the summit must ultimately address. Not the CEOs in their tailored suits or the ministers in their official cars. But the billions of people who will live with whatever decisions emerge from these five days in February, in a city that represents both the promise of technological leapfrogging and the peril of being left behind.

The AI Impact Summit will declare its shared roadmap. The question—as always—is whether anyone will follow it.
