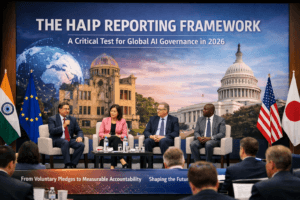

The HAIP Reporting Framework: A Critical Test for Global AI Governance in 2026

The upcoming Brookings Institution and Center for Democracy and Technology discussion on January 22, 2026, serves as a critical precursor to the India AI Impact Summit, focusing on evaluating the first results of the voluntary Hiroshima AI Process (HAIP) Reporting Framework. Launched by the OECD at the request of the G7, this first-of-its-kind mechanism aims to standardize how AI developers report on transparency and risk mitigation. Initial reports from 19 organizations reveal promising trends in governance and risk management. They also expose significant challenges: inconsistent disclosure practices, the inherent limitations of a voluntary system, and the urgent need for the framework to evolve alongside rapid technological advances and binding regulations like the EU AI Act. The event will stress-test the framework’s viability, exploring whether such voluntary transparency can effectively complement global regulatory efforts and build the international trust necessary for responsible AI governance.

From Voluntary Pledges to Measurable Accountability: How the Hiroshima AI Process is Shaping Our AI Future

As artificial intelligence systems become woven into the fabric of global economies, a pivotal test for international cooperation is unfolding. On January 22, 2026, experts from the Brookings Institution and the Center for Democracy and Technology will host a landmark public discussion in Washington, D.C., centered on the Hiroshima AI Process (HAIP) Reporting Framework. This event, staged in the lead-up to the India AI Impact Summit, arrives at a critical juncture: the world’s first global, voluntary AI transparency mechanism is moving from its pilot phase into a defining period of practical implementation and scrutiny.

The discussion will assess whether this novel framework can truly foster the transparency and trust it promises, or whether it will instead expose the stubborn challenges of governing a technology that respects no borders.

The Genesis of a Global Framework

The HAIP Reporting Framework is the operational outcome of a political commitment made by G7 leaders in Hiroshima in May 2023. In response to the explosive proliferation of generative AI, these nations launched the Hiroshima AI Process, aiming to create a forum for collaboration on pressing governance challenges. This process culminated in an International Code of Conduct—a set of 11 voluntary actions for organizations developing advanced AI systems.

However, a code of conduct without a mechanism for accountability risks becoming merely aspirational. In February 2025, at the request of the G7, the Organisation for Economic Co-operation and Development (OECD) formally launched the HAIP Reporting Framework. Its mission is clear: to provide a standardized, voluntary mechanism for organizations to report on their risk mitigation practices, thereby facilitating cross-organizational comparability and identifying global best practices.

The framework quickly garnered significant industry buy-in. Leading developers, including Amazon, Anthropic, Google, Microsoft, and OpenAI, pledged to complete the inaugural reporting cycle, with the first round of reports submitted and published by the OECD in April 2025.

Inside the First Wave of Reports: Key Insights and Divergences

An initial analysis of the 19 organizational reports submitted provides the first concrete data on how the global AI industry is interpreting and implementing responsible AI principles. The OECD’s early insights reveal both promising trends and areas requiring urgent attention.

The following table summarizes the key findings from the first reporting cycle across the framework’s core pillars:

| Framework Pillar | Key Trends from Early Reports | Notable Gaps & Variations |
| --- | --- | --- |
| Risk Identification & Evaluation | Use of diverse methods: technical assessments, adversarial testing (red/purple teaming), stakeholder engagement. | Larger firms focus on systemic risks (e.g., societal bias); smaller entities emphasize sector-specific concerns. |
| Risk Management & Security | Multi-layered strategies are universal, combining technical safeguards, procedural controls, and real-time monitoring. | Practices aligned with standards like ISO or NIST are common, but depth of implementation varies. |
| Transparency Practices | Consumer-facing companies often publish model cards and disclosures; B2B firms share info privately with clients. | Training data transparency remains highly inconsistent, especially among B2B providers. |
| Content Authentication | User notifications via disclaimers are widespread. | Technical tools (watermarking, cryptographic credentials) are in early stages, led by a few large tech firms. |
| Investment in Safety & Public Good | Increased R&D in safety, fairness, and interpretability; projects linked to UN Sustainable Development Goals. | Scope and scale of “AI for good” initiatives vary significantly based on organizational size and mission. |

A standout finding is the industry’s proactive, albeit varied, approach to organizational governance. Most participants reported incorporating AI risk into their overall enterprise risk management systems or establishing dedicated AI governance frameworks, some with oversight at the board level. This suggests that the framework may be catalyzing internal structural changes, with responsibility moving from isolated engineering teams to the highest levels of corporate leadership.

The Brookings Event: A Multistakeholder Stress Test

The upcoming Brookings-CDT discussion is designed to be a comprehensive stress test of this nascent system. The agenda moves from high-level political vision to granular technical detail.

The session will open with remarks from Indian Ambassador H.E. Vinay Mohan Kwatra, immediately framing the HAIP within the broader, high-stakes context of the Global South’s priorities for trustworthy and equitable AI. This is significant, as India—a rising AI power—will host its own AI Impact Summit, making this event a crucial diplomatic and intellectual precursor.

The core of the morning will be a presentation of a new analytical report by Brookings and CDT experts, assessing the framework’s impact on transparency and pinpointing areas for improvement. This will be followed by a multi-stakeholder panel featuring government officials, multilateral representatives, and civil society. Their task is to debate the practical lessons from the first reporting cycle and explore how voluntary mechanisms can complement—rather than complicate—emerging binding regulations like the EU AI Act.

A subsequent industry panel will provide a critical reality check. With representatives from Microsoft, Google, Adobe, and others, it will explore the tangible value—and burdens—of transparency reporting from a corporate perspective. The event will conclude with a timely fireside chat on the U.S. National Institute of Standards and Technology (NIST) and its “Zero Drafts” pilot project. This initiative seeks to accelerate the creation of standards for AI model and dataset documentation, representing a vital technical counterpart to the HAIP’s policy-focused reporting.

Challenges on the Road to Credibility

Despite its promising start, the HAIP framework faces steep challenges that the Brookings conversation is likely to highlight.

The Voluntary Participation Dilemma: The framework’s strength—its voluntary nature—is also its greatest vulnerability. Without widespread adoption, particularly from influential frontier model developers, its ability to set meaningful norms is limited. The current cohort of 19 organizations, while prestigious, is just a fraction of the global ecosystem.

The Consistency and Comparability Gap: Early reports show a wide spectrum of disclosure depth. For instance, practices around training data transparency—a cornerstone for assessing bias and provenance—remain “inconsistent”. Without stricter guidance or standardized metrics, comparing one organization’s “robust” risk management to another’s becomes subjective, undermining the framework’s core goal of comparability.

The Pace of Technological Change: AI capabilities are advancing faster than governance cycles. Participants have already suggested the framework needs annual updates to stay relevant to new risks and practices. A static reporting template could quickly become obsolete.

Interoperability with Binding Law: As noted by industry experts, 2026 marks the first major enforcement cycle of the EU AI Act, which imposes stringent mandatory requirements for high-risk AI systems. A key question for the HAIP’s future is how this voluntary, global framework will interact with regional, binding regulations to avoid creating conflicting or redundant burdens for international companies.

The Path Forward: From Reporting to Robust Governance

For the HAIP framework to evolve from a transparency exercise into a pillar of global AI governance, several steps are critical, many of which will be debated at the Brookings forum.

- From Voluntary to Expected: The next phase may involve “soft law” pressures, such as making HAIP reporting a condition for government procurement contracts or investor due diligence, elevating its status without formal legislation.

- Technical Standardization: Initiatives like NIST’s Zero Drafts project are essential to provide the technical underpinnings. Clear standards for what constitutes adequate model documentation, effective watermarking, or a comprehensive risk assessment will bring rigor to the qualitative reports.

- Tailored Support and Peer Learning: As suggested by participants, the OECD could develop role-specific modules for different actors in the AI value chain and create facilitated forums for sharing best practices, building a true community of practice.

- Linking to Global Priorities: The framework’s section on “Advancing Human and Global Interests” has shown promise, with organizations linking projects to climate action and public health. Strengthening this pillar can align corporate AI development with international priorities like the UN Sustainable Development Goals, building broader political support for the process.

The discussion in Washington is more than a policy seminar; it is a real-time calibration of a daring global experiment. It asks whether a coalition of democratic nations, technologists, and civil society can collaboratively build the guardrails for a technology that is reshaping human society. The insights generated and the direction set on January 22 will not only inform the agenda of the India AI Impact Summit but will also send a powerful signal about the world’s capacity to govern innovation with wisdom, cooperation, and a steadfast commitment to the public good. The road to trustworthy AI is being paved now, and the HAIP Reporting Framework is one of its most important, and closely watched, early blueprints.
