Beyond the Algorithm: How India’s Push for Ethical AI Could Redefine Digital Citizenship

The India AI Impact Summit 2026 confronts the critical juncture where artificial intelligence transitions from a tool into foundational public infrastructure, with a central panel emphasizing that without deliberate ethical design, these systems risk amplifying societal exclusion rather than fostering equity. The discussion, led by experts like Dr. Bhavani Rao R., underscores that preventing “mass exclusion” requires a paradigm shift from reactive audits to proactive, community-centered co-creation, where diverse lived experiences inform AI development from the ground up. This involves overhauling biased data practices, embedding algorithmic transparency, and establishing robust accountability mechanisms to ensure AI serves as a trusted public good. Ultimately, the summit positions India’s unique scale and diversity as a potential blueprint for the world, arguing that building equitable digital futures is not a technical afterthought but a fundamental governance imperative for any nation integrating AI into its public core.

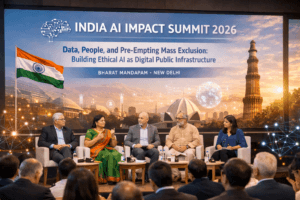

As dawn breaks over Bharat Mandapam on February 17, 2026, a conversation will unfold that may well shape the digital destiny of over a billion people. The India AI Impact Summit 2026 isn’t merely another tech conference; it is a watershed moment where the nation grapples with a fundamental question: In our rush to build intelligent systems, are we architecting a future of empowerment or cementing a new era of systemic exclusion?

The panel session, “Data, People, and Pre-Empting Mass Exclusion: Building Ethical AI as Digital Public Infrastructure,” featuring thought leaders like Dr. Bhavani Rao R., moves beyond theoretical ethics. It confronts the practical, urgent reality that AI is no longer a futuristic concept but the very bedrock of emerging public infrastructure. This shift demands a parallel evolution in our design philosophy—from viewing AI as a mere efficiency tool to recognizing it as a determinant of social equity.

The Stakes: When Public Infrastructure Inherits Private Bias

Imagine a public healthcare AI, trained predominantly on urban hospital data, that underestimates disease prevalence in rural populations. Or an agricultural subsidy allocation model that overlooks smallholder farmers because its data sources favor large, digitally tracked landholdings. These aren’t dystopian fantasies; they are imminent risks as India integrates AI into services from law enforcement and tax collection to welfare distribution and education.

The core challenge, which the summit panel will dissect, is threefold:

- The Data Chasm: AI systems learn from historical data, which often mirrors past inequalities. Biases in gender, caste, socioeconomic status, and geography are not anomalies in this data; they are frequently its defining features. An AI trained on such data doesn’t just replicate bias; it can amplify it, applying the same skewed pattern to millions of decisions at once.

- The Design Blind Spot: When development teams lack diversity, they inherently lack the lived experience to foresee how a system might fail for the marginalized. A voice-recognition system struggling with regional accents, or a facial analysis tool failing on darker skin tones, are classic examples of exclusion by design.

- The Accountability Vacuum: Who is responsible when an AI-driven welfare system wrongfully denies benefits? The programmer, the implementing agency, the data supplier, or the algorithm itself? Without robust, legally grounded accountability frameworks and transparent oversight, harmful outcomes become grievances without recourse.
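One way to make these risks measurable is to compare error rates across groups. The sketch below is illustrative only: the function name `wrongful_denial_rates`, the record layout, and the toy records are all assumptions invented for this example, not data or methods from the summit. It computes, per group, the share of truly eligible people that a hypothetical welfare model denied.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical record layout: (group, truly_eligible, model_approved)
Record = Tuple[str, bool, bool]


def wrongful_denial_rates(records: List[Record]) -> Dict[str, float]:
    """Return, per group, the fraction of truly eligible people the model denied."""
    eligible = defaultdict(int)
    denied = defaultdict(int)
    for group, truly_eligible, approved in records:
        if truly_eligible:
            eligible[group] += 1
            if not approved:
                denied[group] += 1
    return {group: denied[group] / eligible[group] for group in eligible}


# Toy records made up for illustration; they are not real outcomes.
records = [
    ("urban", True, True), ("urban", True, True),
    ("urban", True, True), ("urban", True, False),
    ("rural", True, True), ("rural", True, False),
    ("rural", True, False), ("rural", True, False),
    ("rural", False, False),  # not eligible, so excluded from the rate
]

rates = wrongful_denial_rates(records)
for group, rate in rates.items():
    print(f"{group}: {rate:.0%} of eligible applicants wrongly denied")
```

A large gap between groups does not by itself say who is accountable, but it gives an oversight body a concrete number to question.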

From Reactive Fixes to Proactive Foundations: The “Ethical by Design” Imperative

The session’s title, “Pre-Empting Mass Exclusion,” is a call for a paradigm shift. It advocates moving from a model of auditing and correcting biased AI after deployment to one where ethical principles are foundational components, built into the system’s architecture from the first line of code.

What might this look like in practice?

- Community-Centered Co-Creation: This involves moving beyond token user testing to deeply participatory design. It means engaging with women’s self-help groups, rural farming cooperatives, and urban informal workers not as mere beneficiaries, but as co-architects. Their lived experience becomes critical data, shaping the problem statement and validating solutions. Dr. Bhavani Rao’s extensive work in gender equality and community empowerment through AMMACHI Labs provides a vital blueprint for this approach.

- Inclusive Data Governance: We need new models for data sovereignty and stewardship. This could involve federated learning, where AI models are trained across decentralized data sources and only model updates are shared, so the raw data never leaves the community, preserving privacy and context. Inclusive governance also calls for deliberate data diversification: actively seeking and valuing data from the margins to balance the historical over-representation of privileged groups.

- Algorithmic Transparency and Redress: Ethical AI must be explainable, especially in the public sphere. Citizens have a right to understand why a decision affecting them was made. This requires developing “right to explanation” protocols and establishing independent, multidisciplinary audit bodies capable of interrogating these complex systems. The redress mechanism must be as robust as the technology itself.
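The federated learning idea mentioned under Inclusive Data Governance can be sketched with a toy version of federated averaging. This is a minimal illustration under stated assumptions, not a production design: the one-parameter model, the function names `local_update` and `federated_average`, and the two made-up community datasets are all hypothetical. Each community trains on its own data, and only the resulting model weight, never the raw records, is sent to the coordinator.

```python
from typing import List, Tuple

# (x, y) pairs held locally by one community; they never leave it.
Dataset = List[Tuple[float, float]]


def local_update(w: float, data: Dataset, lr: float = 0.01, epochs: int = 5) -> float:
    """Run a few gradient-descent steps on the model y = w * x using local data only."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def federated_average(w_global: float, communities: List[Dataset], rounds: int = 50) -> float:
    """Coordinator loop: collect local weights and average them by sample count."""
    for _ in range(rounds):
        # Each community returns only its updated weight and its sample count.
        updates = [(local_update(w_global, data), len(data)) for data in communities]
        total = sum(n for _, n in updates)
        w_global = sum(w * n for w, n in updates) / total
    return w_global


# Toy datasets invented for illustration; both follow y = 2 * x.
communities = [
    [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)],
    [(1.0, 2.0), (4.0, 8.0)],
]

w = federated_average(0.0, communities)
print(f"learned weight: {w:.4f}")
```

Weighting by sample count keeps a large community from being treated the same as a tiny one, though a real deployment would also need to consider whether that weighting itself sidelines smaller groups, and would add protections such as secure aggregation.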

The Global Lens: India’s Opportunity to Lead

The world is watching. India’s scale, demographic diversity, and proven capability in building groundbreaking digital public infrastructure (like Aadhaar and UPI) present a unique testbed. If India can pioneer a framework for ethical AI that works here—navigating complexities of language, literacy, and deep-seated social stratification—it will offer a replicable model for the Global South and beyond.

This isn’t just about avoiding harm; it’s about actively harnessing AI for profound public good. When built ethically, AI-driven infrastructure can:

- Personalize public education to bridge learning gaps for first-generation learners.

- Optimize renewable energy distribution to remote communities.

- Provide predictive healthcare in areas with a chronic shortage of doctors.

- Democratize access to credit and markets for micro-entrepreneurs.

The Path Forward: A Multistakeholder Mandate

The composition of the summit panel reflects the understanding that this cannot be solved by technologists alone. Policymakers must craft agile, principle-based regulation. Civil society must act as a watchdog and bridge to grassroots communities. Academics and researchers, like those from the School of Social and Behavioural Sciences, must provide the empirical evidence and interdisciplinary frameworks. Industry holds the keys to responsible innovation.

Session Details & A Call to Conscience:

- Date: February 17, 2026

- Time: 10:30 – 11:25 AM

- Location: Meeting Room 8, Bharat Mandapam, New Delhi

- Summit Registration: https://impact.indiaai.gov.in/registration

The India AI Impact Summit 2026, through sessions like this, marks a critical inflection point. It moves the dialogue from “what AI can do” to “what AI should do.” The insights generated here must translate into actionable roadmaps, institutional mandates, and a renewed civic contract for the digital age.

In the end, building ethical AI for public good is not a technical sub-discipline; it is the essential governance challenge of our time. It is about ensuring that our digital future doesn’t hardwire the inequities of our past, but instead, consciously and deliberately, engineers a path toward greater justice, inclusivity, and human dignity. The conversation in Meeting Room 8 is where that arduous, necessary work begins.
